Is AI Advancing Faster than It’s Becoming Safe?

By admin | Nov 21, 2024 | 4 min read

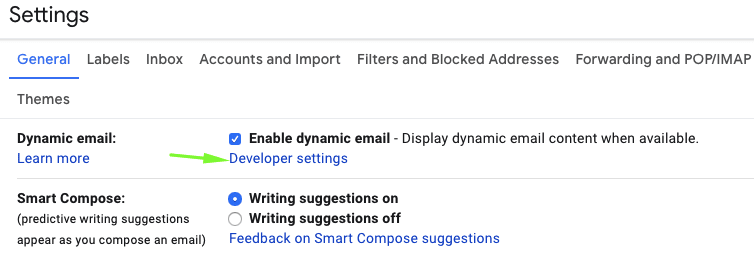

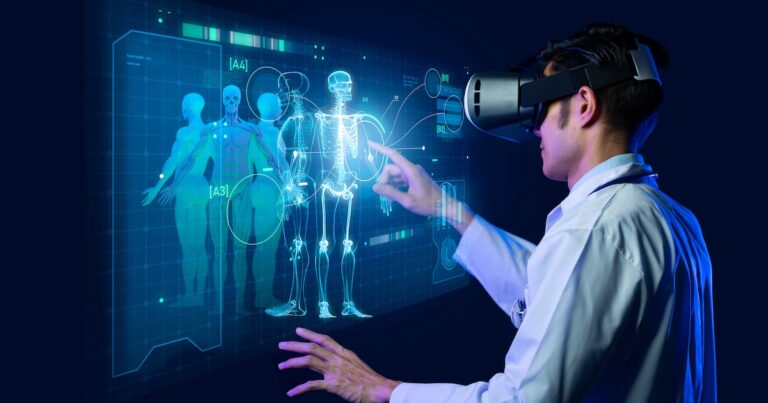

With rapid technological advances, AI now influences nearly every aspect of daily life. Our cars can park themselves and prevent collisions; email and messaging apps predict our next words; AI tools analyze MRI scans to detect early signs of disease; and tools like ChatGPT can help us write nearly anything. AI is fast, smart, and powerful. But is it safe?

As artificial intelligence increasingly shapes our lives, the question of who ensures it evolves responsibly is more pressing than ever. Northwestern University’s Center for Advancing Safety of Machine Intelligence (CASMI) aims to address this. In collaboration with the Digital Intelligence Safety Research Institute at Underwriters Laboratories Inc., CASMI is pioneering research to make AI safer and more equitable.

“We’re not only developing technology but also examining its impact to protect human safety,” says Kristian Hammond, a computer science expert and CASMI’s director. By focusing on human-centered AI practices, CASMI is setting standards to ensure that AI’s benefits extend to everyone.

Kristian Hammond

Bringing Responsibility into AI Development

CASMI’s research goes beyond building algorithms; it aims to deeply understand how AI interacts with people, society, and even our biases. The center’s researchers explore the underlying data, algorithms, and user interactions that drive machine learning systems. They work to establish guidelines that ensure these systems are not only effective but also safe and fair.

This commitment to responsible AI recently earned CASMI a 2024 Chicago Innovation Award for its significant contribution to ethical AI design. “AI’s promise is that it can amplify human capability,” says Hammond. “CASMI’s mission is to build the guardrails to make sure AI’s impact is positive and doesn’t compromise our well-being.”

CASMI’s research team honored with 2024 Chicago Innovation Award

Demystifying AI in Our Daily Lives

AI might sound complex, but chances are you’ve already interacted with it today. From suggested texts on your phone to personalized recommendations on streaming platforms, AI is all around us. Its ability to predict our needs based on past behavior is impressive, but it carries underlying risks that CASMI aims to mitigate.

Hammond describes AI as a system that replicates behaviors we associate with human intelligence: it’s designed to “think” and respond as we would. However, with every smart response or tailored suggestion, AI systems also have the potential to influence, shape, and even limit our choices.

CASMI’s Approach: Balancing Innovation with Caution

CASMI’s goal is to identify where AI might pose risks and establish protocols for safer deployment. Whether AI is used on an individual level or within large-scale social structures, it has the power to affect real lives and communities. CASMI’s researchers analyze potential harm and offer solutions to guide ethical AI practices that prioritize human well-being.

AI’s power to manage vast amounts of data helped speed the development of the first COVID-19 vaccines, which were created in record time. In such cases, AI was “another smart person in the room,” Hammond explains, enhancing human capabilities. But this intelligence has to be applied responsibly; when AI dominates rather than collaborates, it can lead to over-reliance and diminished human agency.

The Fine Line Between Assistance and Control

Consider something as simple as predictive text on your phone. While convenient, it can subtly start to dictate your responses. If you often select the auto-suggested responses, you may find yourself relying on AI to make decisions for you, altering the nature of your communication.

Although this might seem harmless, over time, dependence on AI could narrow our views. Since we each get tailored content, the news I see may be vastly different from yours, which could lead to fragmented perspectives. This personalization can hinder open communication and make it harder to find common ground with others.

Toward a Shared Vision of Safe AI

Hammond emphasizes that while AI has incredible potential, its integration into society must be carefully managed. CASMI’s efforts are an important step toward achieving AI that aligns with human values, supports individual freedom, and avoids unintended consequences. By creating a framework for safe AI, CASMI envisions a future where technology truly serves the public good.

As AI technology continues to evolve, Northwestern and CASMI remind us that responsible development should remain a priority. Their work is shaping a future where AI can amplify human potential without compromising individuality, privacy, or the ability to make personal choices.
