Nvidia Unveils Revolutionary Rubin AI Architecture to Power Next-Gen Computing

By admin | Jan 05, 2026 | 2 min read

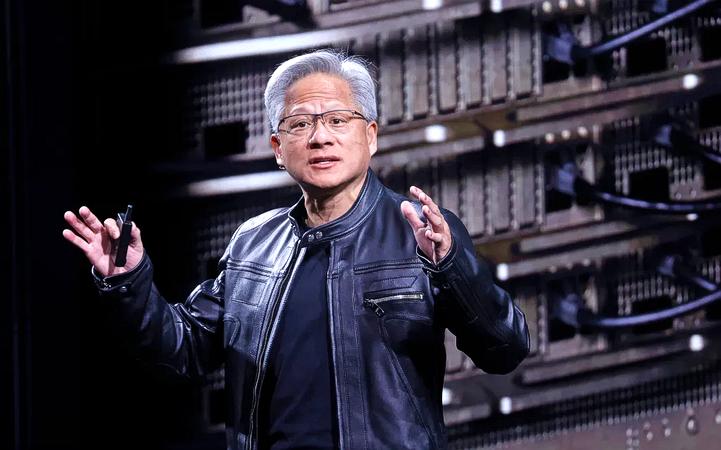

At the Consumer Electronics Show, Nvidia CEO Jensen Huang formally introduced the company’s new Rubin computing architecture, calling it the pinnacle of AI hardware. The architecture is already in production, and Nvidia expects production to ramp further in the second half of the year. “Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing,” Huang told the audience. “Today, I can tell you that Vera Rubin is in full production.”

First unveiled in 2024, the Rubin architecture is the latest product of Nvidia’s rapid hardware development cadence, a pace that has helped make the company the world’s most valuable corporation. The new architecture succeeds the Blackwell platform, which itself followed the Hopper and Lovelace architectures.

Rubin chips are already slated for deployment by nearly every major cloud provider, including through high-profile partnerships with Anthropic, OpenAI, and Amazon Web Services. Rubin systems will also power HPE’s Blue Lion supercomputer and the forthcoming Doudna supercomputer at Lawrence Berkeley National Lab.

Named for astronomer Vera Florence Cooper Rubin, the architecture comprises six distinct chips designed to work together. While the Rubin GPU is the central component, the design also addresses growing bottlenecks in storage and interconnect through improvements to the BlueField and NVLink systems, respectively. It additionally introduces a new Vera CPU, built for agentic reasoning.

Highlighting the benefits of the upgraded storage, Dion Harris, Nvidia’s senior director of AI infrastructure solutions, pointed to the rising memory demands of caching in modern AI systems. “As you start to enable new types of workflows, like agentic AI or long-term tasks, that puts a lot of stress and requirements on your KV cache,” Harris told reporters, referring to the key-value cache, the memory AI models use to store intermediate results for tokens they have already processed so those results need not be recomputed. “So we’ve introduced a new tier of storage that connects externally to the compute device, which allows you to scale your storage pool much more efficiently.”
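To see why long-running agentic workloads strain memory, it helps to estimate how large a KV cache gets. The sketch below uses the standard back-of-the-envelope estimate for a decoder-only transformer: each layer keeps one key vector and one value vector per KV head for every token in the context, so the cache grows linearly with context length. The model dimensions (80 layers, 8 KV heads, head size 128, 16-bit values) are hypothetical, chosen only for illustration, and do not describe any Nvidia product or the external storage tier Harris mentioned.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_value: int = 2) -> int:
    """Rough KV cache size estimate for a decoder-only transformer.

    The leading factor of 2 accounts for storing both a key and a value
    vector per KV head, per layer, for every token in the context.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


# Hypothetical model dimensions, for illustration only.
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128

for tokens in (4_096, 32_768, 131_072):
    size = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, tokens)
    print(f"{tokens:>7} tokens -> {size / 2**30:.2f} GiB")
```

Under these assumptions a single sequence needs about 1.25 GiB of cache at 4,096 tokens but 40 GiB at 131,072 tokens, and the total multiplies again with every concurrent user. That scaling is the pressure a larger, externally attached storage pool is meant to relieve.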

As expected, the architecture also delivers a substantial leap in both speed and power efficiency. According to Nvidia’s own testing, Rubin runs 3.5 times faster than the previous Blackwell architecture on model-training tasks and five times faster on inference tasks, reaching up to 50 petaflops. The new platform also delivers eight times more inference compute per watt.

Rubin’s gains arrive amid fierce competition to build AI infrastructure, with AI labs and cloud providers racing to secure both Nvidia chips and the facilities needed to run them. During an earnings call in October 2025, Huang projected that between $3 trillion and $4 trillion would be spent on AI infrastructure over the next five years.
